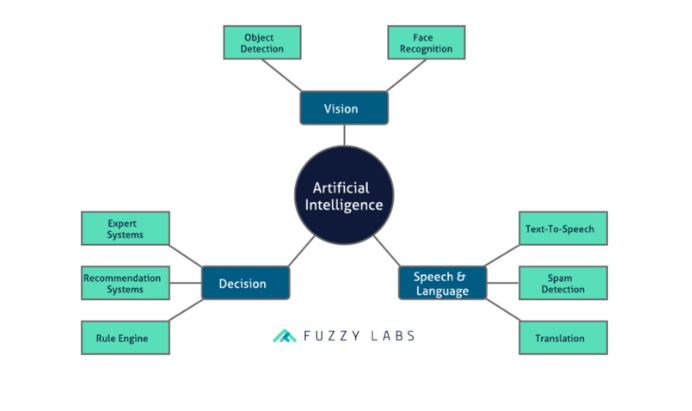

There are a lot of ‘what is AI’ articles out there, and here’s one more. As an AI company it’s almost an obligation for us at Fuzzy Labs to write one.

The History Stuff

Research into AI goes all the way back to the 1950s. To say computing power was limited is an understatement; nevertheless, some important foundations were laid:

- Automated Reasoning: humans are good at reasoning. We do it all the time, for example when figuring out how to get home when a road is closed. The goal was to get computers to do the same.

- Natural Language Processing: the dream is computers that understand human language. Imagine asking a computer for directions to Manchester, and the computer doing some automated reasoning to figure out the best route — science fiction in 1950, but quite normal today.

- Robotics: AI-driven smartphones and smart homes were a distant fantasy. Humanoid robots were the future! A robot needs to see, speak and hear, which launched work on computer vision and speech recognition.

The First Winter — 1974 to 1980

In the quest for intelligent machines it made sense to look to real brains for inspiration. Brains are made of big interwoven networks of neurons (nerve cells), so if we simulate these neural networks, we’ll have a thinking computer, just like that!

A great idea but harder than it seemed. In 1969 Marvin Minsky and Seymour Papert published a book, Perceptrons, that pointed out some fundamental limitations in a particular kind of neural model called the perceptron. It was a huge blow for AI research. By 1974 optimism for AI had worn out and the funding disappeared.

The Second Winter — 1987 to 1993

In the 1980s computers transformed from vast room-sized monoliths to convenient desktop-sized machines. AI was cool again and found a number of applications: video games used it to provide challenging virtual opponents, and businesses adopted expert systems to automate various processes.

This new wave of AI research was largely driven by businesses and by 1993 the money dried up once more.

Semi-modern — 1993 to 2010

Remember spam? In the 90s and early 2000s it drove us all mad. Now it’s largely a non-problem, thanks in good part to something called a Bayesian classifier. This kind of spam detection learns what spam looks like from the messages people report as spam.
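To make that a little more concrete, here is a minimal sketch of a naive Bayes spam filter in Python. It is an illustration under assumptions, not how any real mail provider does it: the training messages are made up, and the names `train`, `spam_score` and `is_spam` are ours. It counts how often each word appears in reported spam versus normal mail (often called ham), then scores a new message by which group its words look more like.

```python
import math
from collections import Counter


def train(messages):
    """Count word occurrences per label from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def spam_score(text, word_counts, label_counts):
    """Return log P(spam | text) minus log P(ham | text); positive leans spam."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total_messages = sum(label_counts.values())
    log_odds = 0.0
    for label, sign in (("spam", 1), ("ham", -1)):
        # Start from how common this label is overall
        log_prob = math.log(label_counts[label] / total_messages)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so an unseen word does not zero the probability
            log_prob += math.log(
                (word_counts[label][word] + 1) / (n_words + len(vocab))
            )
        log_odds += sign * log_prob
    return log_odds


# Made-up reports standing in for real user spam reporting
reports = [
    ("win money now", "spam"),
    ("cheap pills win big", "spam"),
    ("meeting at noon", "ham"),
    ("lunch money for the meeting", "ham"),
]
word_counts, label_counts = train(reports)


def is_spam(text):
    return spam_score(text, word_counts, label_counts) > 0
```

With this toy data, `is_spam("win cheap pills")` comes out true and `is_spam("meeting at lunch")` comes out false. A real filter would learn from far more reports and would look at punctuation, links and headers too.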

Neural networks gained popularity again. These are supposed to imitate the networks of nerve cells that make up our brains, and like our brains, neural networks can learn to recognise patterns. Pictures of cats, for instance.

There was renewed interest in optimisation problems. Suppose you’re Amazon and you want to ship as many packages as possible using the fewest vans, or you employ shift workers and you want to fill all shifts at the lowest cost. For these problems it’s often infeasible to find the perfect solution, but we might come up with good enough solutions.
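As a rough illustration of a 'good enough' approach, here is a sketch of the van problem using a well-known heuristic called first-fit decreasing: sort the packages heaviest first, then put each one in the first van that still has room. The function name `pack_vans`, the weights and the capacity are all made up for the example, and the heuristic is not guaranteed to find the fewest possible vans.

```python
def pack_vans(package_weights, van_capacity):
    """First-fit decreasing: heaviest packages first, each into the first van with room."""
    vans = []  # each van is a list of the package weights loaded into it
    for weight in sorted(package_weights, reverse=True):
        if weight > van_capacity:
            raise ValueError(f"package of weight {weight} exceeds van capacity")
        for van in vans:
            if sum(van) + weight <= van_capacity:
                van.append(weight)
                break
        else:
            # No existing van had room, so send out another one
            vans.append([weight])
    return vans


# Hypothetical package weights and van capacity, in the same units
loads = pack_vans([4, 8, 1, 4, 2, 1], van_capacity=10)
```

For this input the result is two vans, loaded as `[8, 2]` and `[4, 4, 1, 1]`. That happens to be the best possible here, since the packages weigh 20 in total and each van carries 10, but on other inputs the same heuristic can use more vans than strictly necessary.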

The present day

AI is everywhere. Your inbox automatically categorises emails and populates your calendar when you book a flight, your photos app understands what’s in your photos, and airports use face recognition to check your passport.

Today’s proliferation of AI applications results largely from having powerful computers, including mobile devices, but it’s also due to the popularity of cloud computing.

Cloud AI

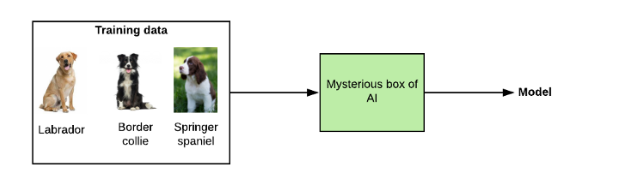

Imagine you want to identify a dog’s breed from a photo. You start by collecting thousands of photos (great way to meet new dogs) and you manually label those photos with the correct breed. Next you feed this data into a mysterious box of AI, which produces something called a model.

The model is the prize you get for all the hard work collecting and labeling data. That model can identify new dogs which it wasn’t trained on.

Training a model to identify dog breeds
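The collect, label, train, predict loop can be sketched in a few lines of Python. This is a deliberately tiny stand-in, not how photo models work in practice: instead of photos it uses two hypothetical measurements per dog (height and weight), and the 'mysterious box' is a simple nearest-centroid classifier, which just remembers the average measurements for each breed. The names `train` and `predict` and all the numbers are assumptions for illustration.

```python
import math


def train(labelled_examples):
    """Average the feature values for each label; those averages are the model."""
    sums, counts = {}, {}
    for features, label in labelled_examples:
        running = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            running[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict(model, features):
    """Return the label whose average is closest to the new example."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical labelled data: (height in cm, weight in kg), breed
training_data = [
    ((53, 18), "border collie"),
    ((50, 16), "border collie"),
    ((22, 3), "chihuahua"),
    ((20, 2), "chihuahua"),
]
model = train(training_data)

# A dog the model has never seen before
breed = predict(model, (48, 17))
```

Here `breed` comes out as `"border collie"`, even though that exact dog was not in the training data. The hard part in real life is the same as in the toy: gathering enough correctly labelled examples.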

Cute, but what’s this got to do with cloud computing?

Google Photos can organise your photos for you. It knows whether a photo was taken outdoors, and whether it contains people or animals. This is because Google have spent years collecting and labeling enormous quantities of photos which they use to train models.

All the major cloud providers offer pre-trained AI models for image classification, text analysis and a lot more. The same kind of model that powers Google Photos is available through Google Cloud.

Cloud AI represents a shortcut to having fully-fledged AI capabilities in a product. Using the work others have put into pre-trained models saves a great deal of time and money.

Exotic neural networks

A lot has happened with neural networks since the 50s. Perhaps you’ve heard the term deep learning. This usually means using very large neural networks with very large sets of training data.

Another term that gets thrown around is convolutional neural network. This is a specialised neural network, loosely modelled on our own visual cortex, that’s popular for visual applications like self-driving cars or dog recognition.
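The 'convolutional' part refers to sliding a small grid of numbers, a filter, across an image and measuring how strongly each patch matches it. Below is a minimal sketch of that one operation in plain Python. The image and the filter are made up, and the filter is written by hand here purely for illustration; in a real convolutional network the filter values are learned during training, and there are many filters stacked in layers.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products.

    No padding is used, so the output is smaller than the input. As in most
    deep learning libraries, the kernel is not flipped, which strictly
    speaking makes this a cross-correlation.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# A tiny made-up greyscale image: dark on the left, bright on the right
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-written filter that responds to a dark-to-bright vertical edge
edge_filter = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, edge_filter)
```

The resulting `feature_map` is `[[0, 18, 0], [0, 18, 0]]`: zero where the image is flat, and a strong response exactly where dark meets bright. Spotting simple patterns like edges is the first step towards spotting ears, wheels or whole dogs.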

Ethical considerations

As AI has begun to influence people’s lives in significant ways, the public discourse has started to emphasise ethics.

If you applied for a job and discovered that an AI is responsible for screening applications, would you trust it? A few years ago Amazon trialed this idea for engineering roles, resulting in bias against women. The AI was trained using historical decisions made by humans, so the computer adopted human biases.

When an AI makes decisions that affect people it’s crucial to consider what biases might affect it.

Organisations like AI for Good UK work to make industry more ethical. In parallel there are efforts to use AI to do good. Many companies involved in AI have an AI for Good initiative, including our own.

The future

Predicting the future of AI is hard. We’re in an AI boom which may not last forever, but even when the hype lulls we think AI is here to stay. It’s intertwined with the technology we use on a daily basis.

As computing power improves we’ll see more sophisticated AI models, and cloud-based AI-as-a-service offerings will make these models accessible to a wide audience.

Edge computing is leading to new application areas. Edge just means ‘not in the cloud’, but it’s not quite as silly as it sounds. You might want to train a model in the cloud, where there’s lots of computing power, and deploy that model to a device with limited power and bandwidth. Think about smart cameras monitoring factory equipment.

Another one to keep an eye on is explainable AI. This is the idea that AI should be able to explain itself when it makes a decision. Right now the models we train give us an answer, like ‘that dog is a Border Collie’, but they can’t explain why. Explainable AI addresses some ethical concerns and it also makes it easier to debug and improve our models.

But really, what is AI?

The curious thing is that once a research idea turns into a useful piece of technology, it’s no longer thought of by the general public as being AI. Spam filtering, for instance, feels so simple, while ‘AI’ sounds so sophisticated.

Perhaps what distinguishes AI is the ability to learn and generalise? Ah, but the AI was still programmed to learn. Ultimately it’s just software.