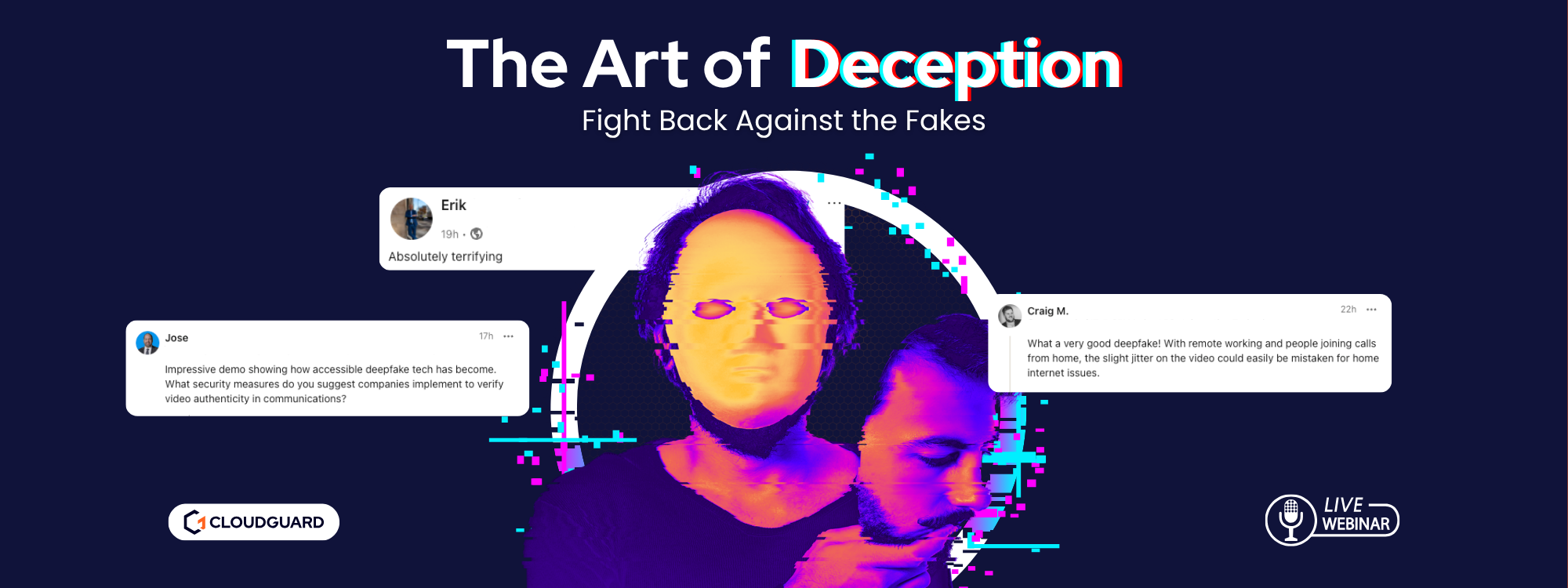

We’ve all seen how easily deepfakes can fool even the most experienced teams. Now it’s time to talk about how to fight back.

Deepfakes and AI-driven manipulation have evolved fast. They’re now 𝐦𝐨𝐫𝐞 𝐫𝐞𝐚𝐥𝐢𝐬𝐭𝐢𝐜 & 𝐚𝐜𝐜𝐞𝐬𝐬𝐢𝐛𝐥𝐞 𝐭𝐡𝐚𝐧 𝐞𝐯𝐞𝐫 and increasingly used to exploit trust.

Attackers can clone voices and fabricate entire conversations using tools anyone can download from places like Hugging Face or GitHub. Traditional awareness training alone can’t keep up.

𝐀𝐫𝐞 𝐲𝐨𝐮 𝐜𝐨𝐧𝐟𝐢𝐝𝐞𝐧𝐭 𝐲𝐨𝐮 𝐜𝐨𝐮𝐥𝐝 𝐯𝐞𝐫𝐢𝐟𝐲 𝐰𝐡𝐚𝐭’𝐬 𝐫𝐞𝐚𝐥 𝐚𝐧𝐝 𝐫𝐞𝐬𝐩𝐨𝐧𝐝 𝐞𝐟𝐟𝐞𝐜𝐭𝐢𝐯𝐞𝐥𝐲 𝐰𝐡𝐞𝐧 𝐬𝐨𝐦𝐞𝐭𝐡𝐢𝐧𝐠 𝐟𝐞𝐞𝐥𝐬 “𝐨𝐟𝐟”?

In this live session, we move beyond basic awareness and into real-world detection and defence. Our cybersecurity experts will demonstrate how today’s deepfakes are used in attacks, what detection tools can (and can’t) do, and the steps organisations should take to reduce risk across people, processes and technology.

𝐃𝐨𝐧’𝐭 𝐣𝐮𝐬𝐭 𝐭𝐫𝐮𝐬𝐭 𝐰𝐡𝐚𝐭 𝐲𝐨𝐮 𝐬𝐞𝐞. 𝐋𝐞𝐚𝐫𝐧 𝐡𝐨𝐰 𝐭𝐨 𝐯𝐚𝐥𝐢𝐝𝐚𝐭𝐞 𝐢𝐭.

Join us live and discover how to detect and defend against the fakes.

𝐖𝐡𝐚𝐭 𝐲𝐨𝐮’𝐥𝐥 𝐥𝐞𝐚𝐫𝐧:

• 𝐋𝐢𝐯𝐞 𝐝𝐞𝐞𝐩𝐟𝐚𝐤𝐞 𝐝𝐞𝐦𝐨𝐧𝐬𝐭𝐫𝐚𝐭𝐢𝐨𝐧 and how attackers weaponise synthetic audio/video in real-world attacks

• 𝐏𝐫𝐚𝐜𝐭𝐢𝐜𝐚𝐥 𝐭𝐞𝐜𝐡𝐧𝐢𝐪𝐮𝐞𝐬 to verify authenticity in real time, even under pressure

• 𝐒𝐢𝐝𝐞-𝐛𝐲-𝐬𝐢𝐝𝐞 𝐝𝐞𝐦𝐨𝐬 of detection tools, showing what they catch and what they miss

• 𝐏𝐨𝐬𝐭-𝐢𝐧𝐜𝐢𝐝𝐞𝐧𝐭 𝐫𝐞𝐬𝐩𝐨𝐧𝐬𝐞 𝐠𝐮𝐢𝐝𝐚𝐧𝐜𝐞: how to contain damage, report internally and prevent recurrence

Why this matters — right now

Scope: Scattered Spider used sophisticated voice impersonation to pose as a sysadmin employee, gain access, move laterally and launch a severe ransomware attack.

Impact: M&S have publicly stated that the impact will be in the region of £300M, with weekly losses of £40M confirmed during the outage.

When one impersonated voice can cost hundreds of millions, recognising what’s real and what isn’t has never been more important.