Your AI-powered app (mobile or web) might already be illegal in the EU. Since February 2025, specific AI practices have been banned outright across the EU. By August 2026, apps falling into "high-risk" categories will need conformity assessments, quality management systems, and CE marking.

If you're building apps that use machine learning, recommendation engines, or integrate with foundation models like GPT-4 or Claude, this regulation affects you directly.

The Scale of the Challenge

The EU AI Act is the world's first comprehensive legal framework for artificial intelligence. Think of it as GDPR for AI, but more technically complex. It applies to any provider placing AI systems on the EU market, regardless of where they're based.

Building apps in Manchester for EU users? You're in scope.

Features That Must Be Removed Now

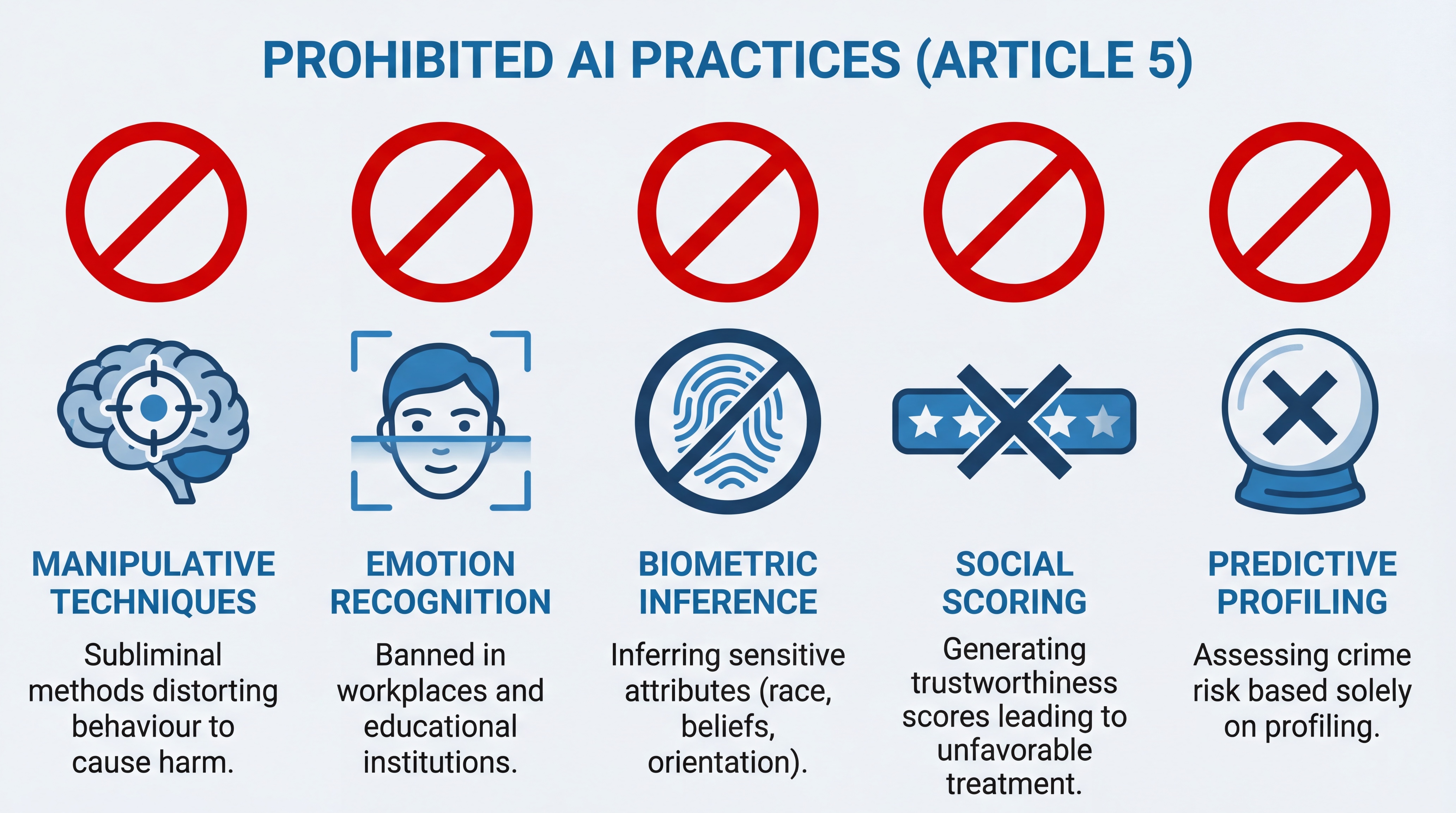

Article 5 bans certain AI practices outright. No conformity assessment or documentation makes them compliant. Features that fall under the ban must be removed:

Manipulative AI techniques - Systems using subliminal, manipulative, or deceptive techniques to distort user behaviour in ways that cause significant harm. This could include notification algorithms optimised to target users at vulnerable moments, or "dark pattern" UX that AI has tuned to exploit psychological weaknesses.

Emotion recognition in workplaces and schools - That "student engagement tracker" using facial analysis? Illegal. HR interviewing tools analysing candidate "sentiment" from video feeds? Also illegal. The only carve-out is for systems used for medical or safety reasons.

Biometric categorisation - Using AI to infer race, political opinions, religious beliefs, or sexual orientation from biometric data is prohibited.

Social scoring - Any system that generates a "trustworthiness score" by aggregating disparate data sources, and that leads to unfavourable treatment of the people scored, is banned.
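
A simple way to check an app against the four categories above is to keep an inventory of AI features, each tagged by a human reviewer. The sketch below is illustrative only: `Feature`, `ProhibitedPractice`, and `features_needing_review` are hypothetical names, and the tags reflect a reviewer's judgement, not an automated legal test.

```python
from dataclasses import dataclass, field
from enum import Enum


class ProhibitedPractice(Enum):
    # Labels paraphrase the four categories listed above.
    MANIPULATION = "manipulative or subliminal techniques"
    EMOTION_RECOGNITION = "emotion recognition in workplaces or schools"
    BIOMETRIC_CATEGORISATION = "biometric categorisation of sensitive traits"
    SOCIAL_SCORING = "social scoring"


@dataclass
class Feature:
    name: str
    # Set by a human reviewer; nothing here is inferred automatically.
    flags: set[ProhibitedPractice] = field(default_factory=set)


def features_needing_review(features: list[Feature]) -> dict[str, list[str]]:
    """Map each flagged feature name to the practices it was tagged with."""
    return {
        f.name: sorted(p.value for p in f.flags)
        for f in features
        if f.flags
    }


if __name__ == "__main__":
    # Hypothetical inventory for illustration.
    inventory = [
        Feature("smart_notifications", {ProhibitedPractice.MANIPULATION}),
        Feature("photo_search"),
    ]
    for name, reasons in features_needing_review(inventory).items():
        print(f"{name}: {', '.join(reasons)}")
```

Anything this returns is a prompt for a conversation with legal, not a verdict.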

Is Your App "High-Risk"?

This is where most app developers will feel the impact. Annex III lists specific use cases that trigger "high-risk" classification regardless of your underlying technology's complexity.

Education and Vocational Training - Systems determining access to education, assigning students to institutions, or evaluating learning outcomes. That "AI Tutor" adapting curriculum based on performance? If its output formally evaluates outcomes, it's probably high-risk.

Employment and Workers Management - Recruitment tools (CV parsing, candidate ranking) and systems for task allocation or performance monitoring. This is significant for gig economy apps. If your algorithm assigns rides, deliveries, or tasks to workers based on their individual behaviour or personal traits, you're probably operating a high-risk system.

Credit and Insurance - AI-powered credit scoring and life/health insurance risk assessment. Your "Buy Now, Pay Later" app using AI to determine credit limits? High-risk.

Critical Infrastructure - AI components managing road traffic, water, gas, heating, or electricity supply. Building apps that optimise HVAC systems? If failure could disrupt heating supply, you're likely high-risk.
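
As a first-pass triage, the four areas above can be turned into screening questions that a product owner answers per app. This is a minimal sketch, not a legal classifier: `AnnexIIIArea`, `SCREENING_QUESTIONS`, and `areas_to_escalate` are hypothetical names, and the questions paraphrase this article, not the Annex III text itself.

```python
from enum import Enum


class AnnexIIIArea(Enum):
    EDUCATION = "Education and vocational training"
    EMPLOYMENT = "Employment and workers management"
    CREDIT_INSURANCE = "Credit and insurance"
    CRITICAL_INFRASTRUCTURE = "Critical infrastructure"


# Paraphrased from the descriptions above; check the Act before relying on them.
SCREENING_QUESTIONS = {
    AnnexIIIArea.EDUCATION: "Does it decide access to education, assign students, or evaluate learning outcomes?",
    AnnexIIIArea.EMPLOYMENT: "Does it rank candidates, allocate tasks, or monitor worker performance?",
    AnnexIIIArea.CREDIT_INSURANCE: "Does it score credit or assess life/health insurance risk?",
    AnnexIIIArea.CRITICAL_INFRASTRUCTURE: "Does it manage road traffic or water, gas, heating, or electricity supply?",
}


def areas_to_escalate(answers: dict[AnnexIIIArea, bool]) -> list[str]:
    """Return areas answered 'yes'. Unanswered areas are escalated too,
    so a missing answer never silently passes."""
    return [area.value for area in AnnexIIIArea if answers.get(area, True)]


if __name__ == "__main__":
    # Hypothetical answers for a delivery app that assigns jobs to couriers.
    answers = {area: False for area in AnnexIIIArea}
    answers[AnnexIIIArea.EMPLOYMENT] = True
    print(areas_to_escalate(answers))
```

Treating unanswered questions as "escalate" is a deliberate design choice here: the cost of a false alarm is a meeting, while the cost of a miss is an unassessed high-risk system.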

The Third-Party API Trap

Many modern AI-powered apps are wrappers around third-party APIs: OpenAI, Google Vertex AI, Anthropic's Claude. The EU AI Act makes this relationship complicated.

If you use a generic "Sentiment Analysis API" to sort customer feedback internally, your obligations are limited. But if you take a foundation model, fine-tune it on medical data, and release "Dr. AI" as a diagnosis assistant, you're the Provider of a High-Risk AI System. Not the API provider. You.

You become fully responsible for conformity assessment, data governance, and risk management of the entire system, including the base model you don't control.
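
The two examples above can be encoded as a rough heuristic for reviewing each integration. This sketch assumes only what the examples say: an internal, low-risk use of a generic API carries limited obligations, while a fine-tuned model released to users for a high-risk purpose makes you the likely Provider. `AIIntegration` and `likely_role` are hypothetical names, and every case in between is deliberately returned as unclear.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIIntegration:
    name: str
    fine_tuned: bool          # you modified the base model
    released_to_users: bool   # shipped in a product, not internal-only
    high_risk_purpose: bool   # intended purpose falls in a high-risk area


def likely_role(integration: AIIntegration) -> str:
    """Heuristic based on the article's two examples; not a legal test."""
    if (
        integration.fine_tuned
        and integration.released_to_users
        and integration.high_risk_purpose
    ):
        return "likely provider of a high-risk system"
    if not integration.released_to_users and not integration.high_risk_purpose:
        return "limited obligations"
    return "unclear: get legal review"


if __name__ == "__main__":
    # The two scenarios described above.
    dr_ai = AIIntegration("Dr. AI", True, True, True)
    feedback = AIIntegration("feedback sorter", False, False, False)
    print(likely_role(dr_ai))
    print(likely_role(feedback))
```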

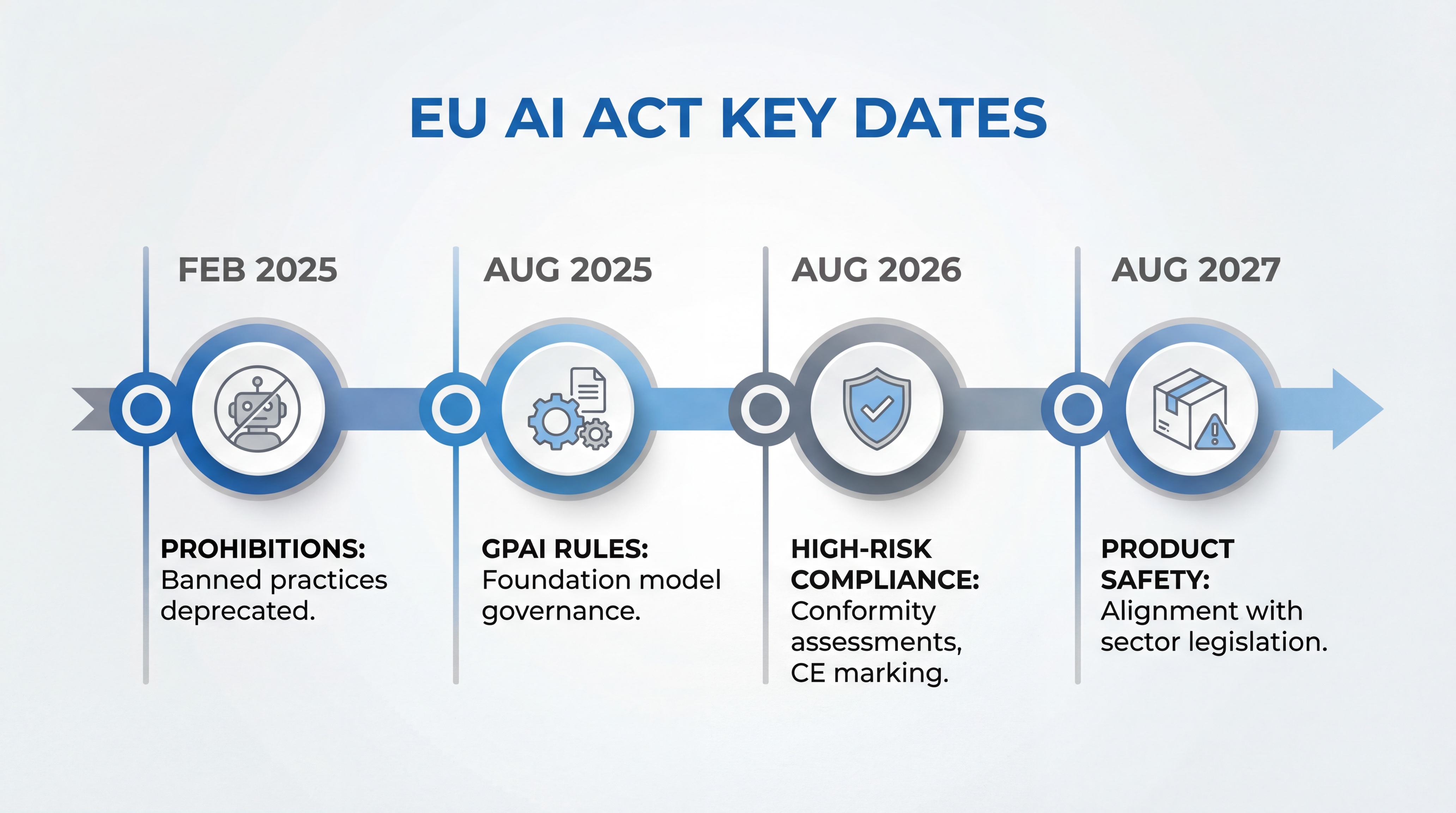

The Timeline

| Date | What Happens |
|---|---|
| Feb 2, 2025 | Prohibitions in force. Banned features must be deprecated. |
| Aug 2, 2025 | GPAI governance. Foundation model providers must publish training data summaries. |
| Aug 2, 2026 | High-risk compliance. Full conformity assessments, QMS, CE marking required. |
| Aug 2, 2027 | Product safety alignment for AI in regulated products. |
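
If you want these dates in a planning script or CI check, the table translates directly into data. A minimal sketch: the dates and labels come from the table above, while `MILESTONES`, `in_force`, and `days_until` are hypothetical helpers.

```python
from datetime import date

# Dates and labels copied from the timeline table above.
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions in force"),
    (date(2025, 8, 2), "GPAI governance"),
    (date(2026, 8, 2), "High-risk compliance"),
    (date(2027, 8, 2), "Product safety alignment"),
]


def in_force(on: date) -> list[str]:
    """Milestones whose start date is on or before the given day."""
    return [label for start, label in MILESTONES if start <= on]


def days_until(label: str, today: date) -> int:
    """Days remaining until a milestone; negative once it has passed."""
    for start, name in MILESTONES:
        if name == label:
            return (start - today).days
    raise KeyError(label)


if __name__ == "__main__":
    today = date.today()
    print(in_force(today))
    print(days_until("High-risk compliance", today))
```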

What Should You Do Now?

- Audit current features against the prohibited practices list. If anything matches, remove it immediately.

- Classify your systems using the Annex III criteria. Know which apps are high-risk.

- Review third-party dependencies. Understand your Provider vs Deployer status for each AI integration.

- Start work on logging infrastructure. High-risk systems must automatically record events while they operate, and that takes time to architect properly.

- Document intended purpose narrowly. Get legal and product teams aligned on precise language.
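
Of the steps above, logging is the one you can start on in code today. The sketch below shows one possible shape for a per-decision log record, serialised as a JSON line. The field names and the `build_decision_record` helper are assumptions for illustration; the Act does not prescribe this schema, so confirm what your system needs to capture before settling on one.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def build_decision_record(
    system_id: str,
    model_version: str,
    input_ref: str,
    outcome: str,
    now: Optional[datetime] = None,
) -> str:
    """Serialise one AI decision as a JSON line.

    input_ref should be a reference to the input (an ID or hash),
    not the raw data, so personal data stays out of the log.
    """
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = {
        "timestamp": timestamp,
        "system_id": system_id,
        "model_version": model_version,
        "input_ref": input_ref,
        "outcome": outcome,
    }
    # Sorted keys keep lines stable and easy to diff.
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    # Hypothetical values for a CV-ranking feature.
    print(build_decision_record("cv-ranker", "2025-01", "application/123", "shortlisted"))
```

Recording the model version with every decision is the part teams most often miss: without it, you cannot later say which model produced a given outcome.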

The August 2026 deadline for high-risk systems isn't far away. Eighteen months sounds comfortable until you factor in development cycles, testing, and conformity assessments.

Need Help Navigating This?

The EU AI Act represents a significant shift in how AI-powered apps must be designed, documented, and operated. If you're unsure how your current or planned mobile app fits within these regulations, or need help architecting compliant systems from the ground up, we can help.

Read our full technical guide: The EU AI Act: What App Developers Actually Need to Know

Discuss your specific situation: Get in Touch

About Foresight Mobile

Foresight Mobile is a Manchester-based mobile app development agency specialising in Flutter, iOS, and Android applications. We help businesses transform ideas into successful mobile products through our structured App Gameplan discovery process and full-cycle development services. Our clients range from startups to enterprises including Bodybuilding.com, Levi's, and Wall Street English.