This is the second post in a series about how to build a data-driven organisation. The first was about why anyone should be interested. This one goes further into the problem of data and therefore the end purpose of a data-driven organisation. As tradition dictates, we will redefine the topic to better describe our gist: from ‘data-driven organisations’ to ‘informed learning organisations’. We will also explain why this distinction matters for the rest of these posts.

Future posts will lay out the plan of topics: the areas of an organisation, and the tools and techniques that can be applied to generate collaborative system-level understanding. The series will end with examples and applications of the ideas, or at least analysis of semi-fictional case studies through these lenses.

What is a Data-Driven Organisation?

Simply put, it is an organisation that uses data to inform its decision-making processes. This is very different from the concept of ‘open data’, which involves publishing data sets for others to consume in innovative ways.

The general definition of a data-driven organisation will be something like:

‘making decisions offering competitive advantage via gathering data from all areas of an organisation and empowering employees to make them from data-related analysis’.

This is absolute undirected nonsense. It will only increase the amount of directionless work and hamper the organisation’s ability to deliver additional value, because it does nothing to address the real problem. Organisations lack awareness of what their problems are, where those problems are and how to build a strategy to tackle them. If this were not the case, they would already be measuring things and making informed decisions.

The focus in these posts will be to outline a strategy that enables data-driven organisations not only to grow and run experiments on themselves, but also to figure out which changes work and to provide data that feeds the generation of new testable hypotheses.

The Problem of Data

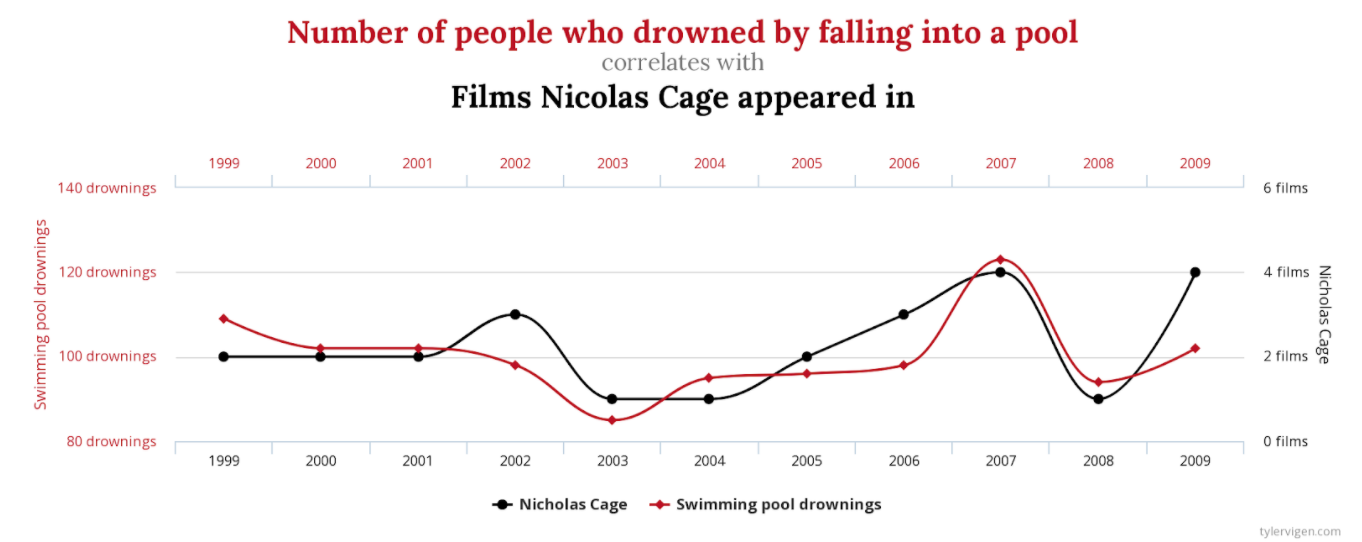

The problem with ‘data’ is that there is an effectively infinite amount of it at any given moment in time, and only an infinitesimal fraction of it can be captured. Even more frustratingly, the tiny amount that you can capture is still effectively infinite relative to your analytic prowess. Then, when you have reduced your input to a vaguely useful dataset and trawl it for a pattern, you fall victim to finding all the coincidences that just happened to create a strong signal rather than any real insight. In other words, you simply create fiction from noise. This is the basis of every ‘correlation is not causation’ argument: you find what you look for.

For instance

http://www.tylervigen.com/spurious-correlations

I find this concerning because I don’t recall seeing Nicolas Cage swimming in any films except maybe that one with Sean Connery and Alcatraz. Maybe method acting causes drowning?
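The ‘fiction from noise’ effect described above can be reproduced without any real data at all. The following is a minimal sketch, not a rigorous statistical treatment: it generates one random ‘target’ series, then trawls a pile of equally random candidate series for the one that correlates best with it. The function names, the number of series and the series length are all assumptions chosen for illustration.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_spurious_correlation(n_series=200, n_points=10, seed=0):
    """Trawl unrelated random series and return the strongest |correlation| found."""
    rng = random.Random(seed)
    # The 'target' is pure noise, and so is every candidate we compare it with.
    target = [rng.gauss(0, 1) for _ in range(n_points)]
    candidates = [
        [rng.gauss(0, 1) for _ in range(n_points)] for _ in range(n_series)
    ]
    return max(abs(pearson(target, candidate)) for candidate in candidates)


best = strongest_spurious_correlation()
print(f"strongest correlation found in pure noise: {best:.2f}")
```

The more candidate series you trawl and the fewer data points each one has, the stronger the best coincidence tends to look, even though nothing here causes anything else.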

This is the most counterintuitive thing about data. It is merely events; it does not capture causation unless very special and hard-to-control conditions are met. So we need something completely different. The problem is that the output of a carefully controlled process and the output of coincidence look the same, and you cannot tell them apart unless you happen to know a lot about how the data was captured.

Therefore saying an organisation is driven by data is pretty meaningless (or even damaging) unless we start to develop our ideas. Data is noise: it is what is streamed off sensors. Something ‘magic’ then happens, and the output of that is what informs the actuators of the organisation. Getting to that magic requires logic, hypothesising and experimentation. It also requires accepting that true (deductive) causation is impossible, and so letting data reshape the system of beliefs is essential. This is achieved by constantly testing our judgements against the new reality. We need abduction.

Which leads to the core problem here: intelligence and learning.

We know we cannot deduce causality, and we also know that we do not want to merely infer causality, because correlation is not causation. We want to start to build systems of organisation that abduct causal relations by testing hypotheses based upon data. Later we can start to build heuristics to simplify decision making in the future.

Intelligence + Learning: Neural Nets – the problem.

This is a specific example of the kinds of logical problems we face. It might briefly get a bit technical, so bear with me!

The generalisation above is so abstract that it actually forms the basis of neural nets, possibly the most abstract generalisation of a system that does ‘stuff’.

Neural nets literally come from the hypothesis:

‘What if we build a system that has sensors connected to actuators that can do things? What if it can feed a score back into itself to adjust the weightings of bits of information in order to do something different? Can that system do something predictable / stable?’

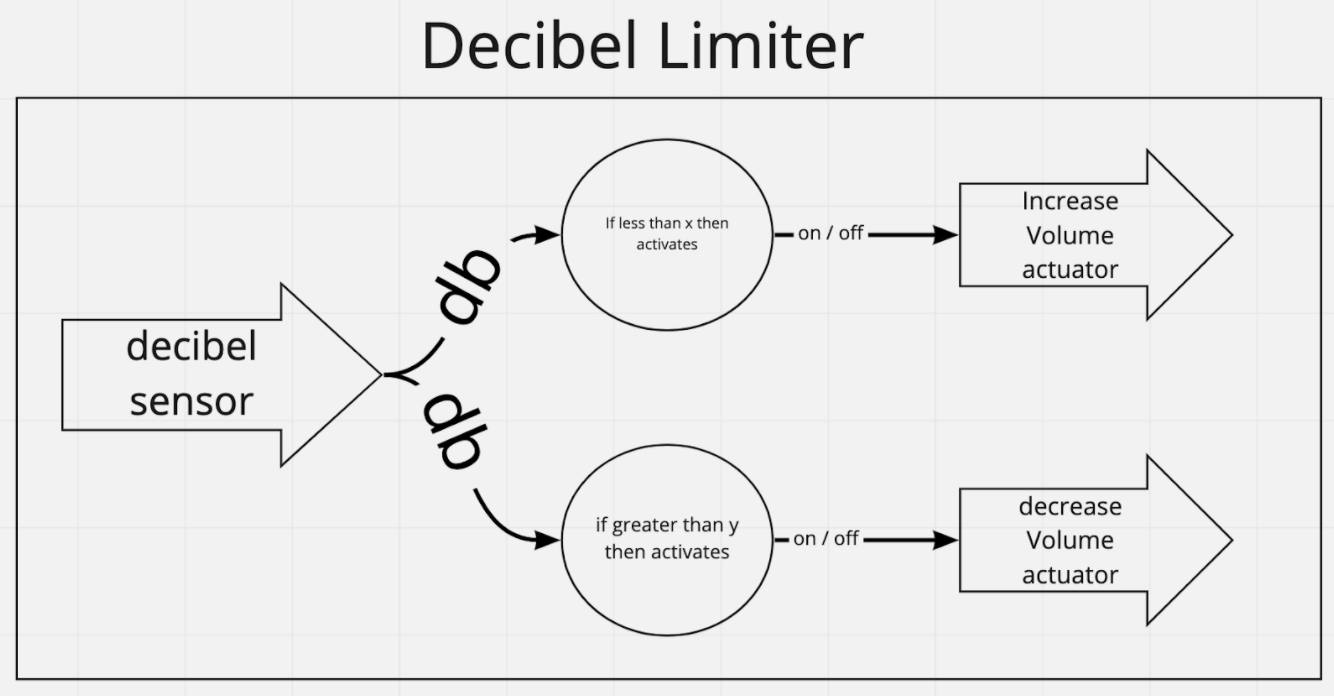

Yes, for instance, it is quite easy to build something that turns on when a sensor goes beyond a threshold and then train a system to do this – it would be in effect moving a lever.

Taking an input, applying a weighting and deciding whether to output a signal is the basis of the neuron. More complex neurons can have multiple inputs and produce an output based on some weighting across them, but hopefully it isn’t a big leap to see how such a neuron could be seen as a clump of many very simple ones working together.
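To make the ‘lever’ concrete, here is a minimal sketch of a single neuron that weights its inputs and fires past a threshold, trained with the classic perceptron update rule (nudge the weights whenever the output is wrong). The sensor readings, the learning rate and the function names are assumptions made up for this illustration, not anything from a real system.

```python
def neuron(inputs, weights, bias):
    """Fire (1) if the weighted sum of the inputs is above zero, else stay off (0)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def train(samples, n_inputs, max_epochs=1000, rate=0.1):
    """Perceptron rule: feed the error back in to adjust the weightings."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, expected in samples:
            error = expected - neuron(inputs, weights, bias)
            if error != 0:
                mistakes += 1
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                bias += rate * error
        if mistakes == 0:
            break  # every training sample is now answered correctly
    return weights, bias


# Hypothetical sensor readings: the lever should be 'on' for high readings only.
readings = [0.0, 0.1, 0.2, 0.3, 0.7, 0.8, 0.9, 1.0]
samples = [([r], 1 if r > 0.5 else 0) for r in readings]

weights, bias = train(samples, n_inputs=1)
predictions = [neuron(inputs, weights, bias) for inputs, _ in samples]
print(predictions)
```

The same `neuron` function accepts any number of inputs, which is all the ‘more complex neuron’ above amounts to: more weights feeding one decision.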

Then, given that we think applying logic to predictable behaviours for given inputs can produce more complex behaviours, what happens if we layer these things, connect them all up and let them feed back on themselves to adjust their own weightings? Now we can start trying to solve problems with many, many inputs and many, many outputs by scaling the number of nodes. Arrange them in large vertical lines with each node in one line connected to every node in the next, ‘just’ train the system and magic happens.
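The ‘vertical lines’ of nodes can be sketched in a few lines. This is only the forward pass of a small fully connected network with random, untrained weights; the training step is deliberately left out. The layer sizes, the sigmoid activation and the input values are assumptions picked for illustration.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def make_layer(n_inputs, n_nodes, rng):
    """One 'vertical line': each node holds a weight per input plus a bias."""
    return [
        ([rng.uniform(-1, 1) for _ in range(n_inputs)], rng.uniform(-1, 1))
        for _ in range(n_nodes)
    ]


def forward(layers, inputs):
    """Pass the inputs through each layer; every node sees every previous output."""
    activations = inputs
    for layer in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in layer
        ]
    return activations


rng = random.Random(0)
sizes = [4, 5, 3, 2]  # 4 inputs, two hidden layers, 2 outputs (arbitrary choice)
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

outputs = forward(layers, [0.2, 0.9, 0.1, 0.5])
print(outputs)
```

Scaling the problem up means making `sizes` longer and its numbers bigger; nothing about the structure changes.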

This gross simplification of the story has far more truth in it than many would like to admit. The success of AI is due more to the falling cost of computation, and of the feedback loops needed to run those neurons, than to any huge conceptual breakthrough.

The Catch

The catch is that all the work in getting these things to work goes into carefully assembling a training data set, especially when the task is complex, like image recognition. This data set will undergo iterations and refinements throughout the process of getting a network to behave. A second set of data must also be gathered and categorised in order to test the network against afterwards. This acts as a scoring algorithm that rates success so that one version can be compared to another. The actual scorer is essentially people verifying manually, because people do the categorisation.
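The held-back test set and the scoring step can be sketched as follows. This is a toy under stated assumptions: the ‘human-labelled’ data is generated by a rule rather than by people, and the two ‘versions’ are hand-written functions standing in for two trained networks. All names here are hypothetical.

```python
import random


def split(labelled, test_fraction=0.25, seed=0):
    """Shuffle the labelled data and hold back a fraction purely for testing."""
    rng = random.Random(seed)
    shuffled = labelled[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(classifier, test_set):
    """Score a classifier as the share of test items matching the human label."""
    correct = sum(1 for item, label in test_set if classifier(item) == label)
    return correct / len(test_set)


# Stand-in for manual categorisation: pretend people labelled each number.
labelled = [(n, "big" if n > 50 else "small") for n in range(100)]
train_set, test_set = split(labelled)


def version_a(n):
    return "big" if n > 50 else "small"


def version_b(n):
    return "big" if n > 70 else "small"


print(accuracy(version_a, test_set), accuracy(version_b, test_set))
```

Note that the score is only ever as good as the labels: if the people doing the categorisation are wrong or biased, the ‘best’ version is simply the one that agrees with them most.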

Why are ‘Algorithms’ Biased?

There is a lot of very careful selection happening here, and a lot of human intervention in selecting the data. This is why neural nets are biased (e.g. highly racially biased): they contain not only the bias of the people selecting the data but also the innate bias that exists in the data sets of the world. Best of all, these networks are very good at finding the cracks in your rules.

Anne Ogborn (expert in symbolic AI) told me several stories of how this goes wrong. One involves trying to evolve something that can walk from a set of hinged limbs (e.g. a biped or a spider). If you allow it to change the length of the hinged limbs, it will exploit the vagueness. It will probably generate a single long vertical limb that simply falls over and crosses the finish line. You have to constrain the maximum length of the limbs to avoid this.
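A deliberately crude sketch of this loophole: there is no physics here at all, just the assumption that a rigid limb which topples forward moves its tip by its own length, and that ‘distance covered’ is the only thing being scored. The mutation size, generation count and length cap are made-up values for illustration.

```python
import random


def distance_covered(limb_length):
    # Toy stand-in for a simulation (an assumption, not a real model):
    # a single limb that falls over reaches as far as it is long.
    return limb_length


def evolve(max_length=None, generations=200, seed=0):
    """Keep any random mutation of the limb length that scores better."""
    rng = random.Random(seed)
    best = 1.0
    for _ in range(generations):
        candidate = max(0.1, best + rng.uniform(-0.5, 0.5))
        if max_length is not None:
            candidate = min(candidate, max_length)  # the constraint closes the loophole
        if distance_covered(candidate) > distance_covered(best):
            best = candidate
    return best


unconstrained = evolve()
constrained = evolve(max_length=2.0)
print(f"no limit: {unconstrained:.1f}, capped at 2.0: {constrained:.1f}")
```

Nothing in the scoring says ‘walk’, so the search never learns to. It simply grows the limb until something stops it.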

Another cautionary tale (unsure of how true) was a military net designed to identify camouflaged tanks. It was 100% successful in testing and 0% in the wild. It turned out that each picture with a tank had some marking that was hard for humans to spot, so they had built a machine to spot that marking by mistake.
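The tank story can be imitated with a toy ‘learner’ that simply picks whichever single feature best predicts the label in its training photos. The photos, features and numbers below are invented to mirror the tale, not taken from any real project: `shape` is an honest but noisy tank detector, and `marker` is the accidental marking that only appears on the lab photos of tanks.

```python
def photo(tank, shape, marker):
    """A photo reduced to three hypothetical 0/1 features."""
    return {"tank": tank, "shape": shape, "marker": marker}


def fit_best_single_feature(photos):
    """Pick the one feature that agrees most often with the 'tank' label."""
    features = [key for key in photos[0] if key != "tank"]

    def score(feature):
        return sum(p[feature] == p["tank"] for p in photos) / len(photos)

    return max(features, key=score)


def detection_rate(feature, photos):
    """Share of real tanks that the chosen feature flags."""
    tanks = [p for p in photos if p["tank"] == 1]
    return sum(p[feature] == 1 for p in tanks) / len(tanks)


# Lab photos: the marker is on every tank picture and on no other picture.
lab_photos = (
    [photo(1, 1, 1)] * 8 + [photo(1, 0, 1)] * 2
    + [photo(0, 0, 0)] * 8 + [photo(0, 1, 0)] * 2
)
# Wild photos: same tanks, but nobody has put a marker on them.
wild_photos = [photo(1, 1, 0)] * 8 + [photo(1, 0, 0)] * 2 + [photo(0, 0, 0)] * 10

chosen = fit_best_single_feature(lab_photos)
print(chosen, detection_rate(chosen, lab_photos), detection_rate(chosen, wild_photos))
```

The learner did exactly what it was scored on. The marker was a better predictor than the tank, so the marker is what it learned.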

We Need Something Dynamic – Continuous Judgements

So even convolutional neural networks don’t do magic. A lot of very careful planning, analysis, categorisation, iteration and refinement goes into building a working ‘Self Learning Artificial Intelligence’ (which in the end is more like a stereo than a brain, because its response is known given a set of known inputs: its behaviour is baked in and no longer changes).

So state-of-the-art AI is never going to solve your problem unless you already know the problem can be scored and answered through logic. However, a great many problems are known not to be like this. We need something like continuous judgement (and continually refined judgement abilities) because our environment and its needs change at a very rapid pace.

Hopefully, over the course of these posts, you will come to agree that the dominant cost of software isn’t its development cost, it is the failure to capitalise on opportunity, and this is often based on timings in the external world.

What does the solution look like?

The reader is, therefore, fairly audacious in wanting to build such a system of systems with neurons as complex as people in a changing environment!

This is what this series of posts is going to get into: unpacking a lot of different fields and joining them together into a framework that is directed at the heart of the problem.

Really, these are Informed Learning Organisations. Starting from data will get nobody anywhere unless it is very carefully controlled in how it is both generated and captured, at which point it is really information. We want to refine this further by developing methods to know where to look to find useful information; otherwise we generate a horrendous amount of probably meaningless, undirected work. We need to move as a graceful whole.

Grace involves minimalism. Getting to grace requires method, practice, continual improvement and self-reflection, but most of all a relaxed naturalness that can only come from a strong core.

As such, we will develop an approach that enables ignoring the vast sea of data to pick out the areas that will give the most return in a measurable way. It turns out that, contrary to the initial conception, the goal of a data-driven organisation is not to measure all the sources of data. It is to narrow down to measuring the few things that matter at the right time.