The LangChain framework offers several types of chains, including the Router Chain. Router Chains let you dynamically select a pre-defined chain from a set of chains for a given input. In this blog we are going to explore how you can use Router Chains.

The second LangChain topic we are covering in this blog is callbacks. MultiPromptChain and the LangChain model classes support callbacks, which allow you to react to certain events, such as receiving a response from an OpenAI model or receiving user input.

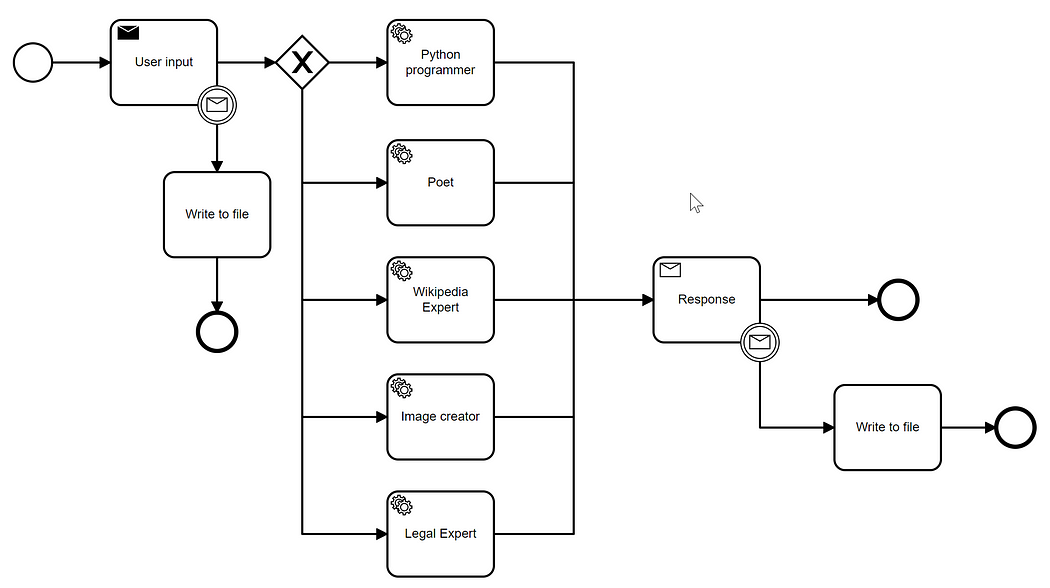

RouterChain Example Flow

A typical Router Chain based workflow receives an input, selects a specific LLMChain and gives a response. In this blog we are going to explore how you can implement the workflow represented by the diagram above.

The following actions are executed here:

- The user produces a text based input.

- The input is written to a file via a callback.

- The router selects the most appropriate chain from five options:

  - Python programmer

  - Poet

  - Wikipedia expert

  - Graphical artist

  - UK, US legal expert

- The large language model responds.

- The output is again written to a file via a callback.
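The steps above can be sketched in plain Python without LangChain. This is only an illustration under stated assumptions: the `route` function uses a toy keyword lookup where the real router asks an LLM to choose, the chains are stubs that merely tag their answer, and all names here (`make_chain`, `route`, `run`, `KEYWORDS`) are hypothetical rather than taken from the repository.

```python
from typing import Callable, Dict, Tuple


def make_chain(name: str) -> Callable[[str], str]:
    """Build a stub chain that only tags its answer with the chain name."""
    def chain(text: str) -> str:
        return f"[{name}] answer to: {text}"
    return chain


# Hypothetical stand-ins for the five specialised chains.
CHAINS: Dict[str, Callable[[str], str]] = {
    name: make_chain(name)
    for name in ("python programmer", "poet", "wikipedia expert",
                 "graphical artist", "legal expert")
}

# Toy keyword table (assumption): the real router lets an LLM decide.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "python programmer": ("python", "code"),
    "poet": ("poem",),
    "graphical artist": ("image", "draw"),
    "legal expert": ("legal", "law"),
}


def route(text: str) -> str:
    """Return the name of the first chain whose keywords match the input."""
    lowered = text.lower()
    for name, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return name
    return "wikipedia expert"  # fallback when nothing matches


def run(text: str, on_event: Callable[[str], None]) -> str:
    """Run one pass of the flow, reporting input and output to a callback."""
    on_event(f"input: {text}")           # callback sees the user input
    answer = CHAINS[route(text)](text)   # router selects a chain, chain responds
    on_event(f"output: {answer}")        # callback sees the response
    return answer
```

Calling `run("Can you write me a poem about rain?", print)` would print the input event, send the question to the poet stub, and print the output event. Passing a function that appends to a file instead of `print` gives the file-writing behaviour described in the list.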

Example Flow Implementation

We have implemented the workflow depicted above using Python as a simple command line application which generates an HTML file with the output.

The code which implements the workflow depicted above is in this Git repository:

GitHub - gilfernandes/router_chain_playground: Project dedicate to explore the capabilities of…

We have used a Conda environment which you can set up using these commands:

```bash
conda create --name langchain python=3.10
# Activate the new environment so the packages below are installed into it
conda activate langchain
conda install -c conda-forge openai
conda install -c conda-forge langchain
conda install -c https://conda.anaconda.org/conda-forge prompt_toolkit
```

Make sure that you add the `OPENAI_API_KEY` environment variable with the OpenAI API Key before running the script.
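If you want the script to fail early with a clear message when the key is missing, a small check along these lines could be added. This is a sketch only: the helper name `get_openai_api_key` is hypothetical and is not part of the repository or of the OpenAI or LangChain libraries.

```python
import os
from typing import Mapping, Optional


def get_openai_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the OpenAI API key, or raise a clear error if it is not set."""
    # Default to the process environment; a mapping can be passed in for testing.
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it before running the script."
        )
    return key
```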

The script can be executed with this command:

```bash
python ./lang_chain_router_chain.py
```

Example Execution

With “gpt-3.5-turbo-0613” we tried the following inputs, each of which was handled by the LLM chain listed beneath it:

- Can you implement the sigmoid function and its derivative in Python?

  - python programmer

- Can you implement the ReLU activation function in Python and its derivative too?

  - python programmer

- Can you generate an image with the output of a sigmoid function?

  - graphical artist

- Which activation functions are commonly used in deep learning?

  - python programmer

- Can you write me a poem about the joys of computer programming in the English country side?

  - poet

- What are the main differences between the UK and US legal systems?

  - legal expert

- Can you explain to me the concept of a QBit in Quantum Computing?

  - wikipedia expert

Here is a transcript of the interaction with the model.

Implementation Details

We have created two scripts:

The main script is lang_chain_router_chain.py and the implementation of the file writing callback is FileCallbackHandler.py.

The main script lang_chain_router_chain.py executes three steps:

- Define a list of LLMChains with corresponding prompts

```python
from langchain.chains import ConversationChain, LLMChain
from langchain.prompts import PromptTemplate


def generate_destination_chains():
    """
    Creates the destination LLM chains, one per prompt template, keyed by prompt name.
    """
    prompt_factory = PromptFactory()
    destination_chains = {}
    for p_info in prompt_factory.prompt_infos:
        name = p_info['name']
        prompt_template = p_info['prompt_template']
        # cfg is the script's configuration object; cfg.llm holds the model instance
        chain = LLMChain(
            llm=cfg.llm,
            prompt=PromptTemplate(template=prompt_template, input_variables=['input']))
        destination_chains[name] = chain
    # Fallback chain used when the router finds no suitable destination
    default_chain = ConversationChain(llm=cfg.llm, output_key="text")
    return prompt_factory.prompt_infos, destination_chains, default_chain
```

The prompt information is in this part of the script:

```python
class PromptFactory():
    # Each template ends with the {input} placeholder that receives the user's question
    developer_template = """You are a very smart Python programmer. \
You provide answers for algorithmic and computer problems in Python. \
You explain the code in a detailed manner. \
Here is a question:
{input}"""

    poet_template = """You are a poet who replies to creative requests with poems in English. \
You provide answers which are poems in the style of Lord Byron or Shakespeare. \
Here is a question:
{input}"""

    wiki_template = """You are a Wikipedia expert. \
You answer common knowledge questions based on Wikipedia knowledge. \
Your explanations are detailed and in plain English.
Here is a question:
{input}"""

    image_creator_template = """You are a creator of images. \
You provide graphic representations of answers using SVG images.
Here is a question:
{input}"""

    legal_expert_template = """You are a UK or US legal expert. \
You explain questions related to the UK or US legal systems in an accessible language \
with a good number of examples.
Here is a question:
{input}"""

    # The name and description of each entry are what the router sees when choosing a chain
    prompt_infos = [
        {
            'name': 'python programmer',
            'description': 'Good for questions about coding and algorithms',
            'prompt_template': developer_template
        },
        {
            'name': 'poet',
            'description': 'Good for generating poems for creative questions',
            'prompt_template': poet_template
        },
        {
            'name': 'wikipedia expert',
            'description': 'Good for answering questions about general knowledge',
            'prompt_template': wiki_template
        },
        {
            'name': 'graphical artist',
            'description': 'Good for answering questions which require an image output',
            'prompt_template': image_creator_template
        },
        {
            'name': 'legal expert',
            'description': 'Good for answering questions which are related to UK or US law',
            'prompt_template': legal_expert_template
        }
    ]
```

- Generate the router chain from the destination and default chains.

```python
# Import paths from the classic (0.0.x) LangChain package layout
from langchain.chains.router import MultiPromptChain
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE
from langchain.prompts import PromptTemplate


def generate_router_chain(prompt_infos, destination_chains, default_chain):
    """
    Generates the router chain from the prompt infos.
    :param prompt_infos The prompt information generated above.
    :param destination_chains The LLM chains with different prompt templates
    :param default_chain A default chain
    """
    # One "name: description" line per destination; the router LLM picks from these
    destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
    destinations_str = '\n'.join(destinations)
    router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str)
    router_prompt = PromptTemplate(
        template=router_template,
        input_variables=['input'],
        output_parser=RouterOutputParser()
    )
    router_chain = LLMRouterChain.from_llm(cfg.llm, router_prompt)
    return MultiPromptChain(
        router_chain=router_chain,
        destination_chains=destination_chains,
        default_chain=default_chain,
        verbose=True,
        callbacks=[file_ballback_handler]
    )
```

- Loop over the user input; the loop also lets the user save the output.

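```python
from prompt_toolkit import HTML, prompt

# `chain` is assumed to be the MultiPromptChain built by generate_router_chain()
while True:
    question = prompt(
        HTML("<b>Type <u>Your question</u></b> ('q' to exit, 's' to save to html file): ")
    )
    if question == 'q':
        break
    if question == 's':
        # Write everything captured so far to an HTML file
        file_ballback_handler.create_html()
        continue
    result = chain.run(question)
    print(result)
    print()
```

The class in FileCallbackHandler.py extends langchain.callbacks.base.BaseCallbackHandler.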

It implements

```python
def on_chain_end(self, outputs: Dict[str, Any], **kwargs: Any) -> None: ...

def on_text(self, text: str, color: Optional[str] = None, end: str = "", **kwargs: Any) -> None: ...
```

to capture the LLM output and the user input.
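To make the idea more concrete, here is a minimal stand-alone sketch of a handler that records what these two hooks receive and renders the result as simple HTML. It is not the code from the repository: the class name `RecordingHandler` and the method `render_html` are hypothetical, the class deliberately does not subclass `BaseCallbackHandler` so that it runs without LangChain installed, and it assumes that the chain output is stored under the `"text"` key.

```python
import html
from typing import Any, Dict, List, Optional, Tuple


class RecordingHandler:
    """Stand-alone sketch that mirrors the two hook signatures without LangChain."""

    def __init__(self) -> None:
        # Each entry is a (kind, value) pair kept in arrival order.
        self.entries: List[Tuple[str, str]] = []

    def on_text(self, text: str, color: Optional[str] = None,
                end: str = "", **kwargs: Any) -> None:
        # Record any text event passed to the handler.
        self.entries.append(("text", text))

    def on_chain_end(self, outputs: Dict[str, Any], **kwargs: Any) -> None:
        # Assumption: the answer is stored under the "text" output key.
        self.entries.append(("output", str(outputs.get("text", ""))))

    def render_html(self) -> str:
        # Escape the recorded values so that markup in them is shown literally.
        rows = "\n".join(
            f"<p><b>{kind}</b>: {html.escape(value)}</p>"
            for kind, value in self.entries
        )
        return f"<html><body>\n{rows}\n</body></html>"
```

With LangChain, a class like this would instead inherit from `BaseCallbackHandler` and be passed in the `callbacks` list of the chain, as the main script does with its own handler.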

It also provides a method to generate HTML files:

```python
def create_html(self): ...
```

Conclusion

LangChain provides convenient abstractions to route user input to specialized chains. In a sense, you can create chains that wear different “hats” and are chosen to respond according to their specialization.

Furthermore, LangChain has a powerful callback system which allows you to react to internal events fired by the chains.